Template-based Data Generation

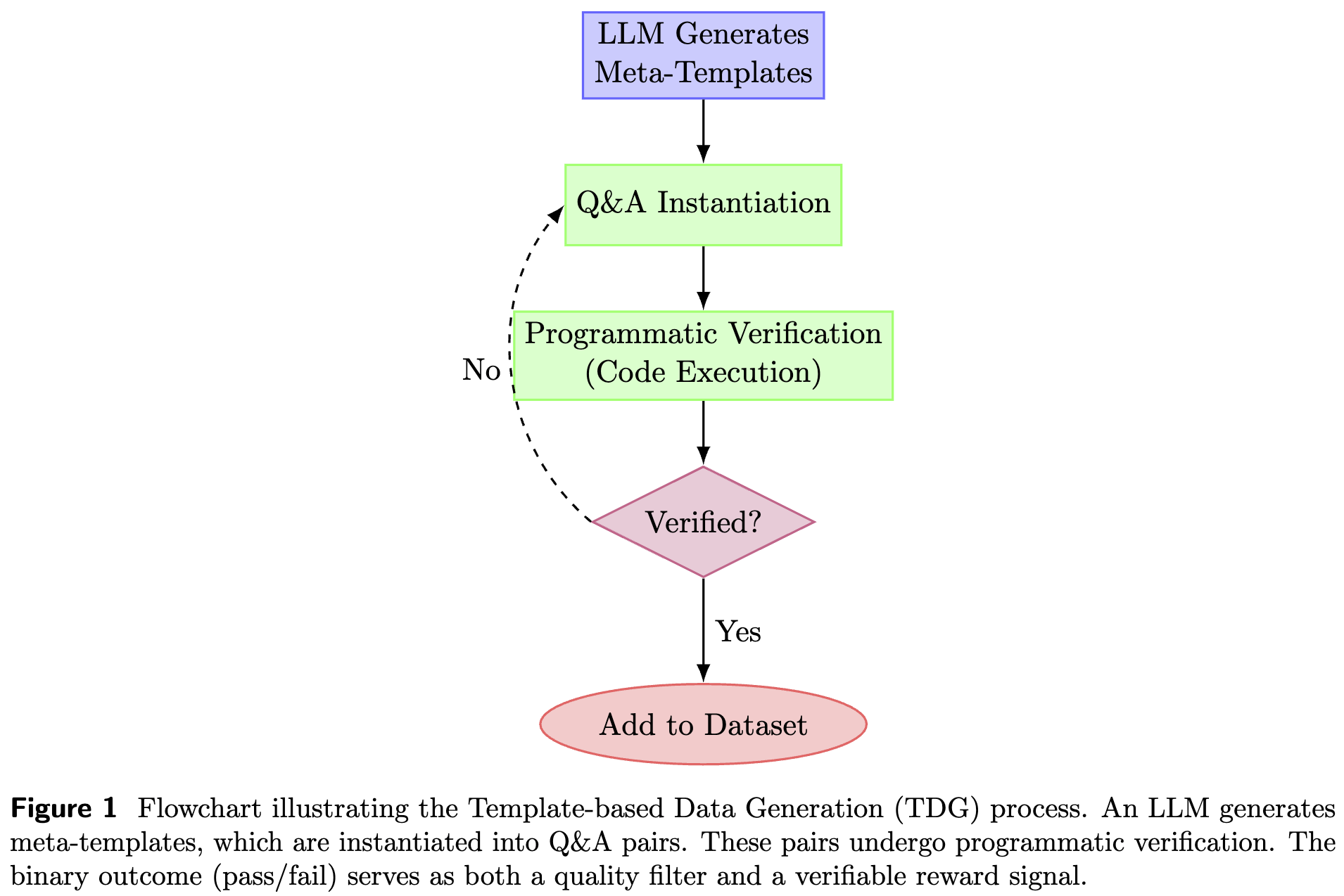

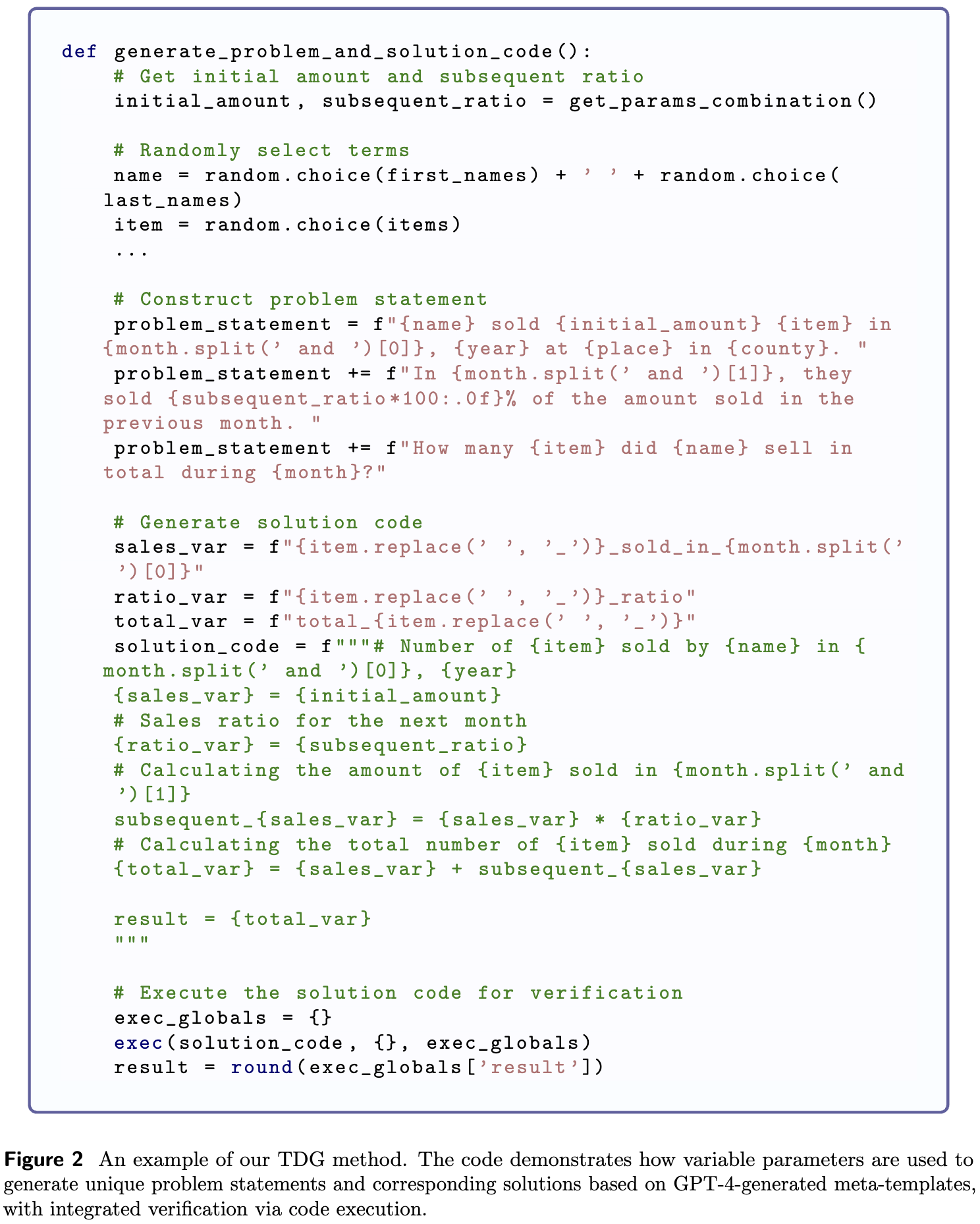

Template-based Data Generation (TDG) systematically produces large numbers of mathematical problems, together with their solutions, from parameterized templates. We use GPT-4 to generate the meta-templates, which capture a wide variety of problem structures and linguistic styles. Varying the parameters within these GPT-4-generated templates gives TDG both scalability and quality in the generated data. The resulting problem sets are diverse and complex, which is essential for training and evaluating large language models on mathematical reasoning tasks.
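To make the idea of a parameterized template concrete, here is a minimal sketch in Python. It is an illustration under assumptions, not the templates used for TemplateGSM: the function name `generate_problem`, the name and item lists, and the numeric ranges are all hypothetical.

```python
import random

# Hypothetical parameter pools; these values are illustrative assumptions,
# not taken from the TemplateGSM templates.
NAMES = ["Ava", "Ben", "Chloe"]
ITEMS = ["apples", "pencils", "marbles"]


def generate_problem(rng: random.Random) -> dict:
    """Fill one fixed template with sampled parameters and compute its answer."""
    name = rng.choice(NAMES)
    item = rng.choice(ITEMS)
    start = rng.randint(10, 50)
    bought = rng.randint(1, 20)
    # Never give away more than the starting count, so the answer stays positive.
    given = rng.randint(1, start)

    problem = (
        f"{name} has {start} {item}. {name} buys {bought} more and then "
        f"gives away {given}. How many {item} does {name} have now?"
    )
    answer = start + bought - given
    solution = (
        f"{name} starts with {start} {item}. After buying {bought}, the total is "
        f"{start + bought}. After giving away {given}, {name} has {answer} {item}."
    )
    return {"problem": problem, "solution": solution, "answer": answer}


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so a run can be reproduced
    for _ in range(3):
        print(generate_problem(rng)["problem"])
```

Because the answer is computed from the same sampled parameters that fill the text, every generated problem comes with a solution that is correct by construction. Scaling up is then a matter of drawing more parameter combinations and adding more templates.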

Main Contributions

Template-based Data Generation (TDG): We introduce TDG, a scalable method for generating an effectively infinite amount of high-quality, domain-specific data using parameterized templates generated by GPT-4.

Elevated Data Augmentation: We use GPT-4 to create the meta-templates themselves, which gives data synthesis a diverse and rich set of problem structures.

Creation of TemplateGSM Dataset: We develop TemplateGSM, a dataset comprising over 7 million synthetically generated math problems with verified solutions, addressing the scarcity of large-scale mathematical datasets.

Enhancement of LLM Performance: We demonstrate that the generated data is effective for pre-training, fine-tuning, and evaluating LLMs, and that it enhances their performance in mathematical reasoning tasks.

Precise Supervision through Code Execution: We provide insights into how TDG offers precise supervision through code execution and verification, promoting the development of models with improved understanding and problem-solving abilities.
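The code-execution check mentioned in the last contribution can be sketched as follows. This is a hedged illustration, not the project's verification pipeline: the field names `solution_code` and `answer`, the convention that the code stores its output in a variable named `result`, and the helpers `run_solution` and `verify` are assumptions made for this example.

```python
def run_solution(code: str) -> object:
    """Execute solution code in a fresh namespace and return its `result` variable."""
    namespace: dict = {}
    # exec is acceptable here only because the code comes from trusted templates.
    exec(code, namespace)
    return namespace["result"]


def verify(sample: dict) -> bool:
    """Accept a sample only if running its code reproduces the stated answer."""
    try:
        return run_solution(sample["solution_code"]) == sample["answer"]
    except Exception:
        # Code that fails to run, or that never sets `result`, is rejected.
        return False


# Hypothetical samples: the same code paired with a correct and an incorrect answer.
CODE = "start = 12\nbought = 5\nresult = start + bought"
good = {"solution_code": CODE, "answer": 17}
bad = {"solution_code": CODE, "answer": 18}

if __name__ == "__main__":
    print(verify(good), verify(bad))
```

A sample whose executed code disagrees with its stated answer, or whose code raises an error, is rejected, so only problems with a machine-checked answer are kept.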